Why Do Machine Reading Comprehension Models Learn Shortcuts?

Published: 2024-11-01 12:27

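1. Introduction

The machine reading comprehension (MRC) task uses question answering to measure whether a model understands natural language text. Since BERT appeared, many pre-trained MRC models have approached or even surpassed human performance on several benchmark datasets, to the point that many papers state in their conclusions that the model "comprehends" the "meaning" of the text.

However, deep learning models are ultimately statistical models. Current MRC models essentially use complex functions to fit statistical cues in the text and predict answers from them, nothing more. The authors point out that a model built only within the world of text, with no connection to the real world, can only ever learn "form" and never "meaning".

As a result, the past two years have seen a number of papers that analyze, criticize, and reflect on the problems of current MRC models. Among them, What Makes Reading Comprehension Questions Easier? points out that current MRC models do not actually reason toward answers in the way we expect. Instead they learn many shortcuts, or in other words some obvious surface rules.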

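One example is locating the answer through position information. Because most SQuAD answers are concentrated in the first sentence of the passage, MRC models tend to predict that the answer most likely lies in the first sentence, and when the first sentence is moved to the end of the passage, model performance drops sharply. Some papers have even fed the answer's position to the MRC model as an input feature, although when humans do reading comprehension, inferring where the answer sits does not constitute "comprehension".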
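The position perturbation described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `move_first_sentence_to_end` is hypothetical, and the regular-expression sentence splitter is deliberately naive (a real experiment would use a proper sentence tokenizer and would also recompute the character offsets of the answer span).

```python
import re


def move_first_sentence_to_end(passage: str) -> str:
    """Return the passage with its first sentence moved to the end.

    Hypothetical helper: sentences are split naively on whitespace that
    follows '.', '!' or '?'.
    """
    sentences = re.split(r"(?<=[.!?])\s+", passage.strip())
    if len(sentences) < 2:
        # Nothing to reorder in a single-sentence passage.
        return passage.strip()
    return " ".join(sentences[1:] + sentences[:1])
```

Comparing a model's accuracy on the original passages with its accuracy on the perturbed ones gives a rough sense of how much it relies on answer position.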

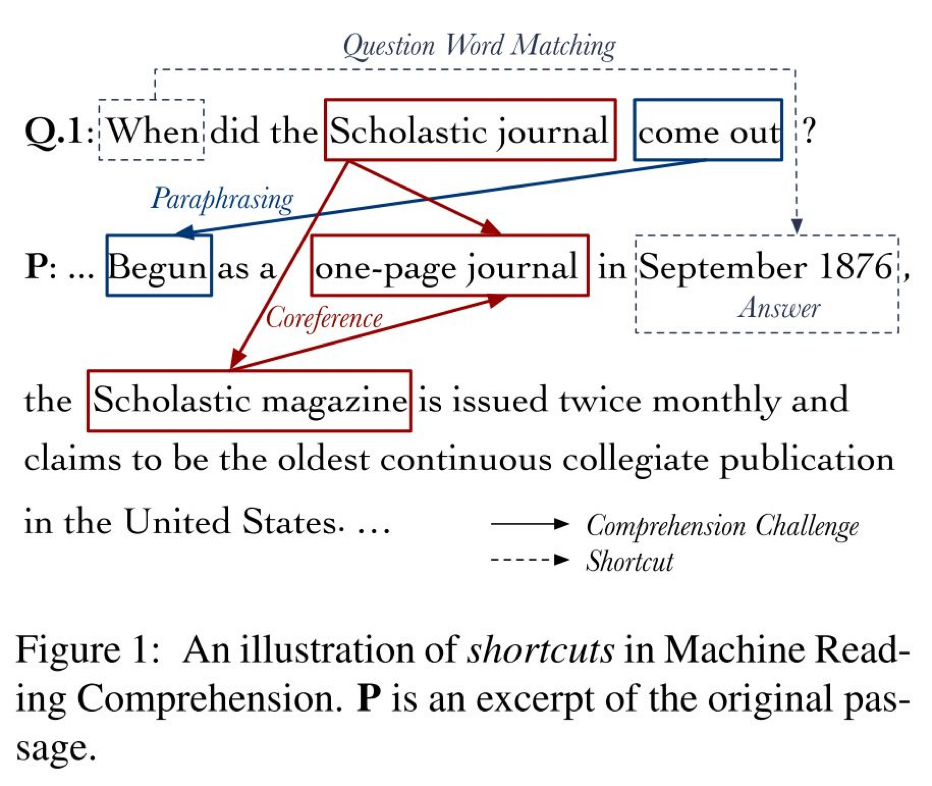

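Figure 1 shows a simple example. We would like an MRC model to understand the semantic consistency between "come out" and "begun", and to derive the correct answer, September 1876, by establishing the co-reference among Scholastic journal, Scholastic magazine, and one-page journal.

In practice, however, the model can directly recognize that September 1876 is the only time entity in the whole passage that can answer a When question. In other words, simple question word matching is enough to answer correctly, with no need for complex reasoning such as co-reference resolution.

Figure 1: Data example

An answer derived from such a simple cue is of course unreliable. If the text contained two time entities, the MRC model would quite possibly not know which one is the answer.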

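Because shortcuts exist, a model can infer the answer without truly understanding the meaning of the question and the passage. For example, after important parts of the question or passage are removed so that the question becomes unanswerable, MRC models can still give the correct answer. This shows that current MRC models are very brittle, and that their scores do not truly measure so-called "reading comprehension" ability.

The shortcut-taking behavior of MRC models is somewhat similar to human behavior. When we meet an exam question we cannot answer, we look for irrelevant cues to derive the answer: pick the longest option when three are short and one is long, pick C when the options look uneven, or fall back on the elimination, special-value, and estimation methods common in math exams. But we do not rely on these tricks when actually learning, because they are not real knowledge.

Although many papers have demonstrated that the shortcut phenomenon exists and have proposed methods to mitigate it, no paper has yet tried to investigate how MRC models come to learn these shortcut tricks. This paper aims to find a quantitative way to analyze the internal mechanisms by which models learn shortcut questions and non-shortcut questions.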

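2. Dataset Construction

The first difficulty in studying this question is that no existing dataset labels whether a training example contains a shortcut. This makes it hard to analyze how strongly a model is affected by shortcut examples, and hard to analyze whether an MRC model really took a shortcut when answering a question.

Building on SQuAD, the paper addresses this by designing two synthetic MRC datasets. In both datasets, each instance contains a shortcut version and a challenging version of an original (passage, question) example. When building the datasets, the two versions must stay consistent in length, style, topic, vocabulary, answer type, and so on, so that the presence or absence of the shortcut is the only independent variable. The authors then run experiments on the two datasets to analyze how shortcut questions affect MRC model performance and the learning process.

The shortcut versions cover two kinds of shortcut: question word matching (QWM) and simple matching (SpM). QWM means the model can match the answer by recognizing the type of the question word. SpM means the model can match the answer through lexical overlap between the question and the sentence that contains the answer.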
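To make the two shortcuts concrete, the sketch below implements each one as a trivial heuristic. It is not the paper's code: the names `QUESTION_WORD_TO_TYPE`, `question_word_match`, and `simple_match` are hypothetical, entity types are assumed to be supplied already (for instance by some NER tool), and tokenization is plain whitespace splitting.

```python
from typing import List, Optional, Tuple

# Hypothetical mapping from a question word to the entity type it expects.
QUESTION_WORD_TO_TYPE = {"who": "PERSON", "when": "DATE", "where": "LOCATION"}


def question_word_match(
    question: str, entities: List[Tuple[str, str]]
) -> Optional[str]:
    """QWM heuristic: answer only if exactly one entity has the expected type.

    `entities` is a list of (text, type) pairs assumed to come from an
    external entity tagger.
    """
    words = question.lower().split()
    if not words:
        return None
    expected = QUESTION_WORD_TO_TYPE.get(words[0])
    candidates = [text for text, etype in entities if etype == expected]
    # With zero or several candidates the shortcut gives no unique answer.
    return candidates[0] if len(candidates) == 1 else None


def simple_match(question: str, sentences: List[str]) -> str:
    """SpM heuristic: pick the sentence sharing the most tokens with the question."""
    q_tokens = set(question.lower().split())
    return max(sentences, key=lambda s: len(q_tokens & set(s.lower().split())))
```

When a second entity of the same type is present, `question_word_match` returns `None`, which is the situation the challenging versions are meant to create.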

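Both datasets are built on top of SQuAD, with paraphrased sentences obtained through back-translation. The resulting QWM-Para and SpM-Para datasets have training/test sizes of 6306/766 and 7562/952 respectively. The construction process is outlined briefly below; see the original paper for the full details.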

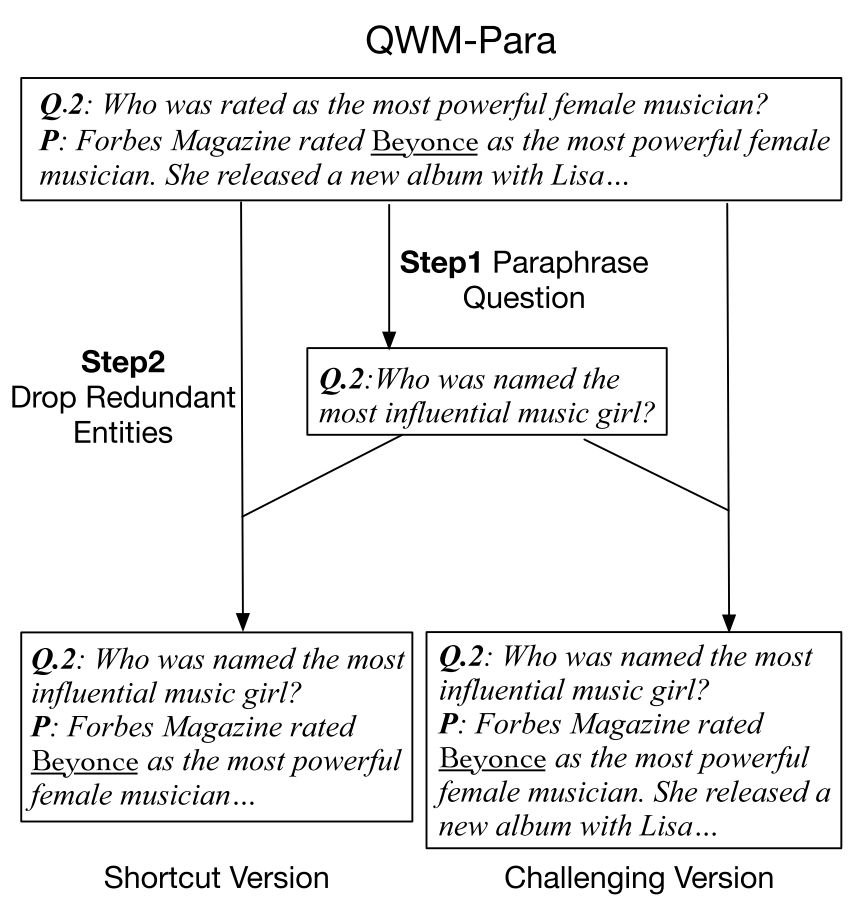

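Figure 2 shows how the QWM-Para dataset is constructed. In the example in the figure, the shortcut version lets the model infer that the answer is Beyonce simply by matching the question word Who with the only person entity, Beyonce. In the challenging version, another person entity, Lisa, acts as a distractor. This prevents the model from inferring the answer through the question word matching shortcut, so the model is expected to recognize the paraphrase relation between "named the most influential music girl" and "rated as the most powerful female musician".

Figure 2: Construction of the QWM-Para dataset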

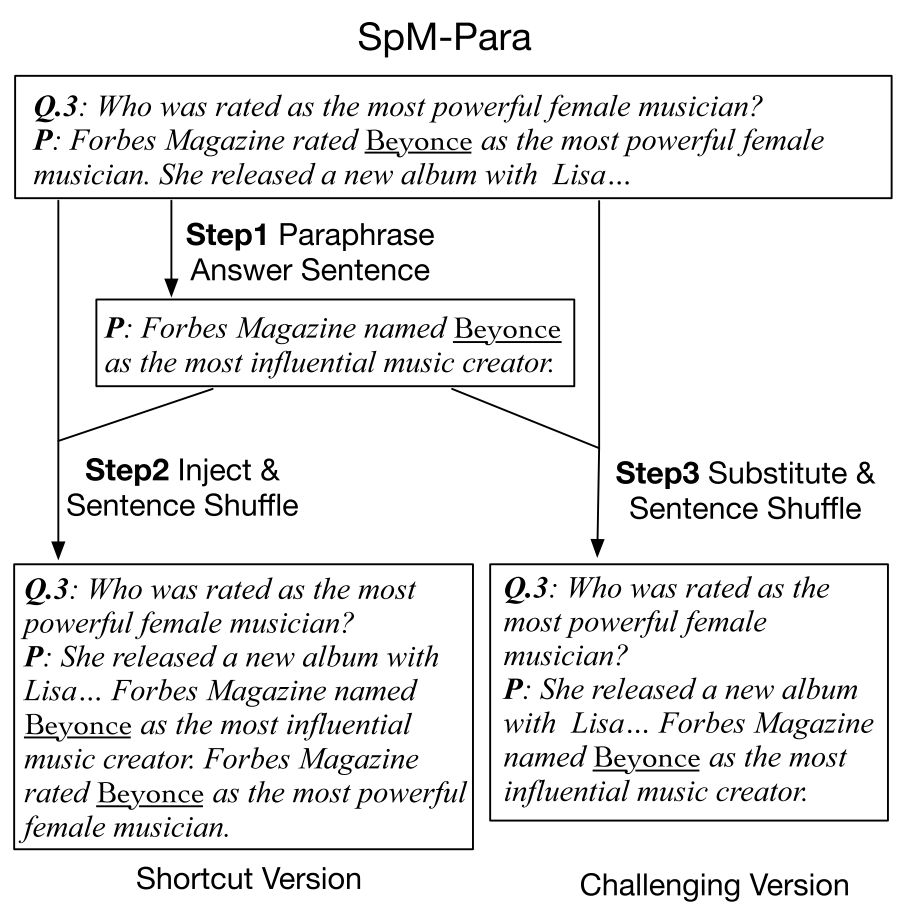

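Figure 3 shows how the SpM-Para dataset is constructed. In the shortcut version of the example, the model can obtain the answer Beyonce through simple lexical matching on "rated as the most powerful female musician". In the challenging version, only a paraphrased version of the original text is provided, which prevents the model from finding the answer through simple lexical matching and therefore demands paraphrasing ability from the model.

Figure 3: Construction of the SpM-Para dataset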

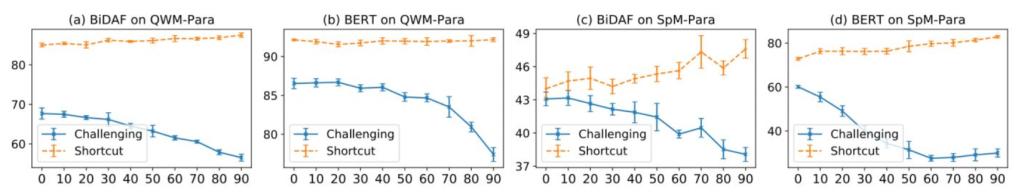

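3. How Do Shortcut Questions Affect Model Performance?

How do models come to learn shortcut tricks?

Hypothesis: because most questions in the dataset are shortcut examples, models learn shortcut tricks first.

Verification: train models with different proportions of shortcut examples and observe their performance on the shortcut test set and the challenging test set, to determine how strongly the models are affected by shortcut examples.

The authors train two classic MRC models, BiDAF and BERT. As Table 1 shows, when the proportion of shortcut questions in the dataset increases from 0% to 90%, the extra shortcut examples bring only a small gain but cause performance on challenging examples to drop substantially. This indicates that the presence of shortcut questions hinders the model from learning the content of the passage.

Table 1: Experimental results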
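One way to build training sets with a controlled proportion of shortcut examples is sketched below. The sampling details are an assumption, not taken from the paper: the function name `mix_training_set`, the `"shortcut"` / `"challenging"` dictionary keys, and the fixed random seed are all hypothetical.

```python
import random
from typing import Dict, List


def mix_training_set(
    pairs: List[Dict[str, dict]], shortcut_ratio: float, seed: int = 0
) -> List[dict]:
    """Keep the shortcut version for a given fraction of instances.

    Each element of `pairs` is assumed to hold both versions of one
    instance under the keys "shortcut" and "challenging".
    """
    if not 0.0 <= shortcut_ratio <= 1.0:
        raise ValueError("shortcut_ratio must be in [0, 1]")
    rng = random.Random(seed)
    order = list(range(len(pairs)))
    rng.shuffle(order)
    # The first n_shortcut shuffled indices use the shortcut version.
    n_shortcut = round(shortcut_ratio * len(pairs))
    shortcut_ids = set(order[:n_shortcut])
    return [
        pairs[i]["shortcut"] if i in shortcut_ids else pairs[i]["challenging"]
        for i in range(len(pairs))
    ]
```

Because every instance contributes exactly one version, the training set size stays constant across ratios and only the share of shortcut examples changes.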

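Note that even when the training set contains shortcut and challenging examples in a 1:1 ratio, MRC models still perform better on shortcut questions. This shows that models tend to fit shortcut examples first, which in turn suggests that learning lexical matching is much easier than learning paraphrasing. To verify this, the authors train MRC models separately on the shortcut dataset and the challenging dataset, then compare the number of iterations and the number of parameters needed to reach the same level on the training set. MRC models trained on the shortcut dataset need fewer iterations and fewer parameters, which indicates that paraphrasing ability is indeed harder to learn.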
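The iteration comparison can be expressed as "how many evaluation steps until a target training score is reached". The helper below is a minimal sketch with a hypothetical name, `steps_to_reach`; it assumes one score is logged per evaluation step.

```python
from typing import Optional, Sequence


def steps_to_reach(scores: Sequence[float], target: float) -> Optional[int]:
    """Return the 1-based step at which the score first meets the target.

    Returns None if the target is never reached.
    """
    for step, score in enumerate(scores, start=1):
        if score >= target:
            return step
    return None
```

Calling it on the training curves of the shortcut-trained and challenging-trained models with the same target gives two step counts that can be compared directly.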

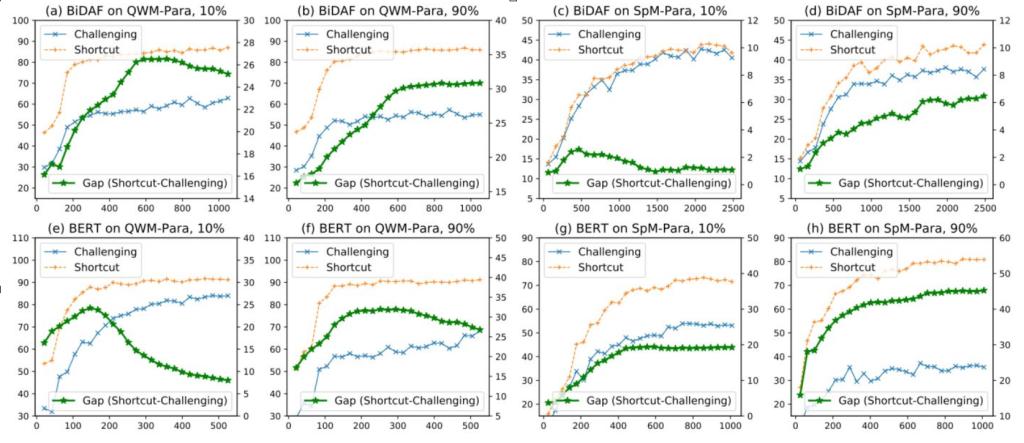

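4. How Do Models Learn Shortcuts?

The experiments above show that shortcut examples are indeed easier to fit and that models tend to learn shortcut tricks first.

Conjecture: in the early stage of training, the gradients given by shortcut examples are clearer (lower variance), so the model tends to descend in the direction that fits shortcut examples. In the later stage of training, the model is trapped in the local optimum of shortcut tricks and cannot go on to learn the harder paraphrasing ability.

Verification: the larger the gap between the same MRC model's performance on the shortcut dataset and on the challenging dataset, the more shortcut tricks the model can be considered to have learned. On this basis, the authors train MRC models on training sets containing 10% and 90% shortcut examples respectively.

Table 2: Experimental results

In the middle and late stages of training, when the training set contains only 10% shortcut examples, this gap gradually shrinks. This indicates that the model begins to learn more of the harder paraphrasing skill, and that challenging examples now contribute more noticeably to the gradient. But if the training set contains 90% shortcut examples, the gap levels off, which means the model's learning path is still dominated by shortcut examples and the model cannot learn paraphrasing from the remaining 10% of challenging examples alone.

In other words, a small number of unsolved challenging examples is not enough to push the model to learn the more complex paraphrasing skill.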
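The gap used in this section as a proxy for how much shortcut behavior a model has learned can be tracked per checkpoint. The sketch below assumes that scores on the shortcut and challenging test sets are logged at the same checkpoints; the function name `shortcut_gap` is hypothetical.

```python
from typing import List, Sequence


def shortcut_gap(
    shortcut_scores: Sequence[float], challenging_scores: Sequence[float]
) -> List[float]:
    """Per-checkpoint difference: shortcut-set score minus challenging-set score."""
    if len(shortcut_scores) != len(challenging_scores):
        raise ValueError("both score sequences must cover the same checkpoints")
    return [s - c for s, c in zip(shortcut_scores, challenging_scores)]
```

A gap that shrinks over later checkpoints corresponds to the 10% setting described above, while a gap that flattens out corresponds to the 90% setting.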

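5. Conclusion

The paper answers why many MRC models learn shortcut tricks while ignoring comprehension challenges. It first designs two datasets in which each instance has a simple version and a challenging version, where the challenging version requires relatively complex reasoning to answer rather than question word matching or simple matching.

The paper finds that learning shortcut questions generally requires fewer computational resources, and that MRC models tend to learn shortcut questions in the early stage of training. As the proportion of shortcut questions in training grows, MRC models quickly learn shortcut questions while ignoring challenge questions.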

References

[1] Max Bartolo, A. Roberts, Johannes Welbl, Sebastian Riedel, and Pontus Stenetorp. 2020. Beat the AI: Investigating adversarial human annotation for reading comprehension. Transactions of the Association for Computational Linguistics, 8:662–678.

[2] Danqi Chen, Jason Bolton, and Christopher D Manning. 2016. A thorough examination of the cnn/daily mail reading comprehension task. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 2358–2367.

[3] Christopher Clark, Kenton Lee, Ming-Wei Chang, Tom Kwiatkowski, Michael Collins, and Kristina Toutanova. 2019. BoolQ: Exploring the surprising difficulty of natural yes/no questions. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 2924–2936, Minneapolis, Minnesota. Association for Computational Linguistics.

[4] Pradeep Dasigi, Nelson F. Liu, Ana Marasović, Noah A. Smith, and Matt Gardner. 2019. Quoref: A reading comprehension dataset with questions requiring coreferential reasoning. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), pages 5925–5932, Hong Kong, China. Association for Computational Linguistics.

[5] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. Bert: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 4171–4186.

[6] Li Dong, Jonathan Mallinson, Siva Reddy, and Mirella Lapata. 2017. Learning to paraphrase for question answering. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, pages 875–886.

[7] Dheeru Dua, Yizhong Wang, Pradeep Dasigi, Gabriel Stanovsky, Sameer Singh, and Matt Gardner. 2019. Drop: A reading comprehension benchmark requiring discrete reasoning over paragraphs. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 2368–2378.

[8] Michael Glass, Alfio Gliozzo, Rishav Chakravarti, Anthony Ferritto, Lin Pan, G P Shrivatsa Bhargav, Dinesh Garg, and Avi Sil. 2020. Span selection pretraining for question answering. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pages 2773–2782, Online. Association for Computational Linguistics.

[9] Xanh Ho, Anh-Khoa Duong Nguyen, Saku Sugawara, and Akiko Aizawa. 2020. Constructing a multihop QA dataset for comprehensive evaluation of reasoning steps. In Proceedings of the 28th International Conference on Computational Linguistics, pages 6609–6625, Barcelona, Spain (Online). International Committee on Computational Linguistics.

[10] Harsh Jhamtani and Peter Clark. 2020. Learning to explain: Datasets and models for identifying valid reasoning chains in multihop question-answering. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 137–150, Online. Association for Computational Linguistics.

[11] Robin Jia and Percy Liang. 2017. Adversarial examples for evaluating reading comprehension systems. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, pages 2021–2031.

[12] Yichen Jiang and Mohit Bansal. 2019. Avoiding reasoning shortcuts: Adversarial evaluation, training, and model development for multi-hop QA. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pages 2726–2736, Florence, Italy. Association for Computational Linguistics.

[13] Dimitris Kalimeris, Gal Kaplun, Preetum Nakkiran, Benjamin L Edelman, Tristan Yang, Boaz Barak, and Haofeng Zhang. 2019. SGD on neural networks learns functions of increasing complexity. In Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019.

[14] Divyansh Kaushik and Zachary C Lipton. 2018. How much reading does reading comprehension require? a critical investigation of popular benchmarks. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 5010–5015.

[15] Tom Kwiatkowski, Jennimaria Palomaki, Olivia Redfield, Michael Collins, Ankur Parikh, Chris Alberti, Danielle Epstein, Illia Polosukhin, Jacob Devlin, Kenton Lee, Kristina Toutanova, Llion Jones, Matthew Kelcey, Ming-Wei Chang, Andrew M. Dai, Jakob Uszkoreit, Quoc Le, and Slav Petrov. 2019. Natural questions: A benchmark for question answering research. Transactions of the Association for Computational Linguistics, 7:453–466.

[16] Yuxuan Lai, Yansong Feng, Xiaohan Yu, Zheng Wang, Kun Xu, and Dongyan Zhao. 2019. Lattice cnns for matching based chinese question answering. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 33, pages 6634–6641.

[17] Christopher D. Manning, Mihai Surdeanu, John Bauer, Jenny Finkel, Steven J. Bethard, and David McClosky. 2014. The Stanford CoreNLP natural language processing toolkit. In Association for Computational Linguistics (ACL) System Demonstrations, pages 55–60.

[18] Sewon Min, Eric Wallace, Sameer Singh, Matt Gardner, Hannaneh Hajishirzi, and Luke Zettlemoyer. 2019. Compositional questions do not necessitate multi-hop reasoning. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pages 4249–4257, Florence, Italy. Association for Computational Linguistics.

[19] Minjoon Seo, Aniruddha Kembhavi, Ali Farhadi, and Hannaneh Hajishirzi. 2017. Bidirectional attention flow for machine comprehension. In International Conference on Learning Representations.

[20] Pramod Kaushik Mudrakarta, Ankur Taly, Mukund Sundararajan, and Kedar Dhamdhere. 2018. Did the model understand the question? In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 1896–1906.

[21] Liang Pang, Yanyan Lan, Jiafeng Guo, Jun Xu, Lixin Su, and Xueqi Cheng. 2019. Has-qa: Hierarchical answer spans model for open-domain question answering. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 33, pages 6875–6882.

[22] Jeffrey Pennington, Richard Socher, and Christopher D. Manning. 2014. Glove: Global vectors for word representation. In Empirical Methods in Natural Language Processing (EMNLP), pages 1532–1543.

[23] Pranav Rajpurkar, Jian Zhang, Konstantin Lopyrev, and Percy Liang. 2016. Squad: 100,000+ questions for machine comprehension of text. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, pages 2383–2392.

[24] Siva Reddy, Danqi Chen, and Christopher D Manning. 2019. Coqa: A conversational question answering challenge. Transactions of the Association for Computational Linguistics, 7:249–266.

[25] Chenglei Si, Shuohang Wang, Min-Yen Kan, and Jing Jiang. 2019. What does bert learn from multiple-choice reading comprehension datasets? ArXiv, abs/1910.12391.

[26] Saku Sugawara, Kentaro Inui, Satoshi Sekine, and Akiko Aizawa. 2018. What makes reading comprehension questions easier? In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 4208–4219.

[27] Saku Sugawara, Pontus Stenetorp, Kentaro Inui, and Akiko Aizawa. 2020. Assessing the benchmarking capacity of machine reading comprehension datasets. In Thirty-Fourth AAAI Conference on Artificial Intelligence.

[28] Adam Trischler, Tong Wang, Xingdi Yuan, Justin Harris, Alessandro Sordoni, Philip Bachman, and Kaheer Suleman. 2017. Newsqa: A machine comprehension dataset. In Proceedings of the 2nd Workshop on Representation Learning for NLP, pages 191–200.

[29] Eric Wallace, Shi Feng, Nikhil Kandpal, Matt Gardner, and Sameer Singh. 2019. Universal adversarial triggers for attacking and analyzing NLP. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), pages 2153–2162, Hong Kong, China. Association for Computational Linguistics.

[30] Dirk Weissenborn, Georg Wiese, and Laura Seiffe. 2017. Making neural QA as simple as possible but not simpler. In Proceedings of the 21st Conference on Computational Natural Language Learning (CoNLL 2017), pages 271–280, Vancouver, Canada. Association for Computational Linguistics.

[31] Jindou Wu, Yunlun Yang, Chao Deng, Hongyi Tang, Bingning Wang, Haoze Sun, Ting Yao, and Qi Zhang. 2019. Sogou machine reading comprehension toolkit. arXiv preprint arXiv:1903.11848.

[32] Kun Xu, Yansong Feng, Songfang Huang, and Dongyan Zhao. 2016. Hybrid question answering over knowledge base and free text. In Proceedings of COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers, pages 2397–2407, Osaka, Japan. The COLING 2016 Organizing Committee.

[33] Zhilin Yang, Peng Qi, Saizheng Zhang, Yoshua Bengio, William Cohen, Ruslan Salakhutdinov, and Christopher D Manning. 2018. Hotpotqa: A dataset for diverse, explainable multi-hop question answering. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 2369–2380.